The Working Group has packed a great deal of wisdom into eight pages. Proposing sensible wisdom as formal guidance to engineers constitutes a major change from risk management as currently practised in many places. If the outcome is successful implementation of the guidance, then that will represent real progress. However, the publication represents a major step that is itself fraught with risk and unintended consequences. This post examines some of them.

Delightfully, it includes the word 'ergonomics', for which many thanks are due to Reg Sell. The particular wording "consider the role that ergonomics can play in mitigating the risk of human error" is a compromise many ergonomists would accept only with considerable reluctance, since it reflects an outdated and negative view of how accidents happen (see, e.g., Sidney Dekker's writings). The clause also highlights the difficulties of writing well-intentioned guidance, since there are many sectors of engineering with mandatory (or effectively mandatory) requirements to use (rather than consider) ergonomics. In my experience of talking to engineers, most of them are blissfully unaware of their obligations, e.g. under the Machinery Safety directive.

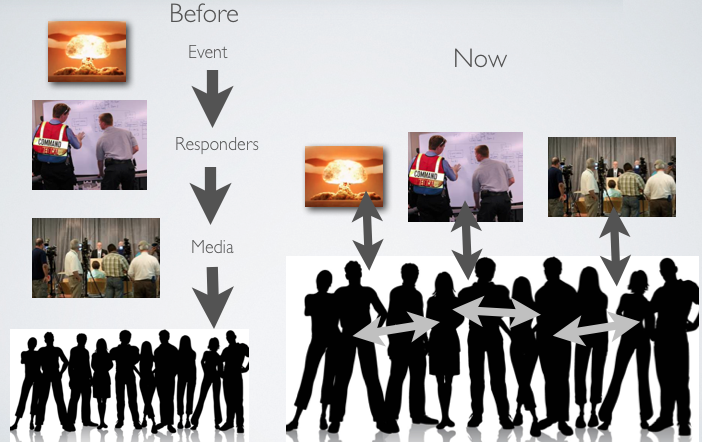

My reading of the scope of the guidance is that it goes well beyond engineering competence thresholds such as E3, C3. Indeed, asking a technically-based engineer to meet these guidelines seems well beyond reasonable for the engineer, for her employer, or for society. It is hard to see 'addressing human, organizational and cultural perspectives' as an engineering competence or responsibility [such perspectives are already in BS 31100:2008, and it is not obvious why they have been put on the engineer's desk]. Given the engineer also appears to be responsible for monitoring the Twitter feed (as part of principle 6), she is going to be a busy girl. A footnote saying THESE GUIDELINES CAN BE MET ONLY WITH THE FULL INVOLVEMENT OF HUMAN SCIENCES IN A MULTI-DISCIPLINARY TEAM would probably be enough. Or is this supposed to be a move to post-normal engineering (cf. post-normal science)?

There is the risk that the existence of these guidelines, in the absence of more specific material on implementation, puts the responsible engineer at risk in a post-accident situation. How is an engineer supposed to reconcile an obligation to ALARP with John Adams' evidence-based rants and Lord Young's idea of common sense? 'Challenging'. Courtroom hindsight will leave plenty of room for debate e.g. when are procedures 'over-elaborate'?

High-level management gets a mention - just. Governance does not. The word 'business' does not, nor does anything to do with finance. The references to open reporting and culture are fine, but these are often unlikely to be within the purview of an engineer - for example, one looking at a $100M shortfall in maintenance on a petro-chemical plant. What support is the Engineering Council going to give in such situations? My reading of the guidance is that it is putting the engineer in harm's way, rather than out of it. How are Vince Weldon situations to be addressed?

At a more mundane level, the principles should toll the death knell of the clerical approach to tending risk management databases. Given the scale of vested interest behind such an approach, engineers trying to end the atomised treatment of risk registers will need some serious back-up, and it is not obvious that the standards and regulations cited will do that.

The list of useful references is a single page, and I am sure there is a long wish-list on the cutting-room floor. My wish-list item would have been IRGC material - in particular, their Risk Governance (.pdf). Firstly, what is being asked for in the Engineering Council guidance is more at the level of governance than management. Secondly, the IRGC knowledge characterisation of types of risk problems seems very powerful, and could be readily implemented using the Cynefin framework.

The document is a missed opportunity to support lost opportunity risk and innovation. It would not really have helped the Nokia smartphone team in 2004 when their anticipation of the iPhone was turned down. The 'safe' option of high-level management doing nothing needs to be changed. This is discussed at the Argenta blog here.

Finally, it is as well to remember that “The Engineering Method is the use of heuristics to cause the best change in a poorly understood situation within the available resources” (Billy V. Koen).